Pretty Messed Up

Late night boredom leads to a shocking discovery

Matt Barnette

3/26/2024 · 4 min read

It was late. The kind of late where the world quiets down and you can almost hear the hum of the earth spinning, and I was bored. Since I still wasn't tired, I decided to see what all the fuss was about with generative AI, Midjourney specifically. It was all I had been hearing about lately. So, I signed up. The first thing I did as I explored my new Discord app was prompt it for nonsensical things you would never see in real life. I don't remember exactly what, just dumb, silly stuff. Somewhere between dumb and silly, I had an odd thought.

I was curious what I would get if I prompted it with a single word. And not just any single word, but a feeling, or something the AI should have no concept of, like joy, sadness, empathy, or excitement. I was sure it would be interesting to peer into the mind of the AI and see what assumptions it made or what biases it might have, but I wasn't trying to find something wrong with it. I was looking for the odd bit of unique inspiration that only comes from the unexpected. I was hoping to be wowed by the responses to my equally odd prompts, and I guess it delivered on that. Just not in the way I expected.

To be honest, I don't know what I expected, but it wasn't this.

I’m being intentionally vague. I apologize. I'll jump to the interesting part.

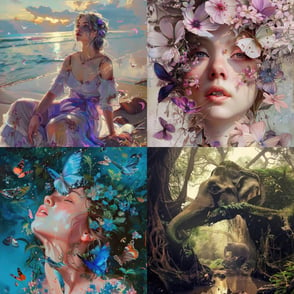

So, it had been an hour or so of sending through whatever random words came to mind, effectively wasting that entire time, and I was running out of steam. I planned to head to bed soon, but I decided to keep going a little further, and that's when I prompted the words "beautiful" and "pretty" separately. The first results for each had an image or two that were really interesting, so I sent the two words through again. I had done this several times: if I saw something I thought was interesting or worth exploring, I would always send it through at least once more. Sometimes more. But after these two came back a second time and I scrolled past all 4 responses (16 images) on the screen, I noticed something.

For the first time in this experiment, the images were all of the exact same thing. As I mentioned, I had repeated words a few times, and since the words I sent through were intentionally vague, the output was varied and different each time. It had never focused on one subject before this. So part of me was curious: was that all it would produce when prompted with "beautiful" or "pretty"?

The short answer is yes, which you could argue is a problem by itself. By then I was too far down the rabbit hole to turn back, so I decided to go for an even 10 runs of each. That means 80 images were returned to me, and of those 80 images, 79 were exclusively of white women. The outlier was a couple of elephants.

Now, I'm not one to dive into the murky waters of bias lightly; it's a heavy topic, laden with complexities and sensitivities. But this... this was too glaring to ignore. It bothered me, not just on the level of fairness or representation, but at the very foundation of truth and accuracy. If an AI like Midjourney, which should be unbiased since the models are trained without guidance, leans so heavily toward a narrow definition of beauty, what does that say about the data it's trained on? What about the dataset it was given would make it equate beauty with that particular group?

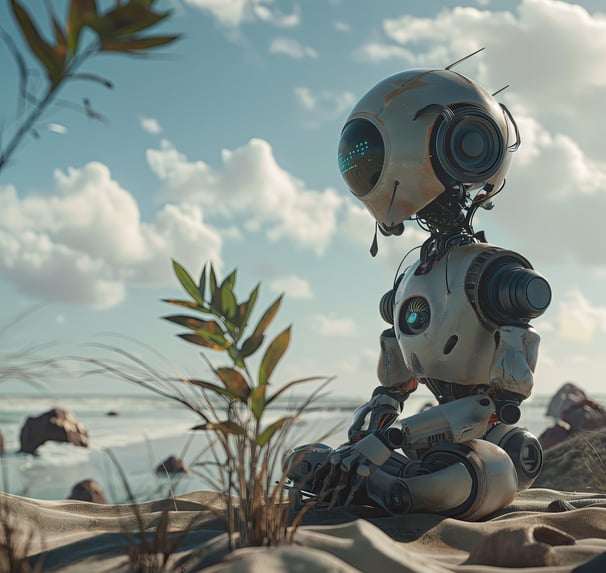

ChatGPT, the so-so storytelling cousin in the AI family, spins yarns with words, unaware of the worlds its words attempt to create. But Midjourney, with its eye for images, should have a lens that catches these patterns, right? If it's learning from its outputs, why didn't it see the monotony, the lack of diversity, the narrow alley it was walking down? I can't think of a single person on the planet who wouldn't spot that discrepancy immediately.

This isn't just about aesthetics or social justice, though those are certainly part of it; it's also about the integrity of AI and the patterns it perpetuates. We need a system that doesn't just replicate, but reflects, analyzes, and corrects its course. A system that understands the weight of its creations, ensuring they're as diverse and multifaceted as the world they draw from. And what does this say about the patterns we are deliberately feeding into it? One could argue that filtering or limiting its dataset would give it an inaccurate view of the world, but my answer is that some things shouldn't be included in that view if we ever want to move past them. If we don't want these patterns to persist, then doing nothing and letting the data speak for itself isn't good enough.

It reminds me of a Greg Giraldo bit about how news outlets will outline potential targets for terrorist groups, and half the time it seems like they are handing out ideas the bad guys haven't thought of yet. We did the same thing with ChatGPT, training it on decades of our own stories about machines turning on their makers. If we believe it will grow into AGI, and we are all worried about that tech trying to wipe us out, well, I wonder where it got that idea.

All in all, I didn't start this experiment to stir the pot or to cast aspersions. It was mere curiosity, a flicker of a thought about how abstract concepts would be visualized by an entity I expected to be devoid of human bias, with no instructions in its training and little from me beyond concepts or emotions an AI would find difficult to relate to. Yet here I am, discussing a discovery that saddens me: a pattern that should not exist in today's society, let alone in a tool as powerful and influential as AI.

We're standing at a crossroads, where technology can either cement the biases of yesteryear or break free, creating a tapestry that truly reflects the diversity of human experience. It's a hefty responsibility, and I hope we're up to the task. Because in the end, the patterns we create, in AI or otherwise, are the legacies we leave behind.